To Use AI, Lawyers Should Steal This From Software Developers


Something remarkable has happened in software development over the past few years. Large language models have revolutionized how code gets written. By one estimate, thirty percent of the Python code being made publicly available by December 2024 was AI-generated [1]. Only a few years earlier, the amount of AI-written code was essentially zero. That is a striking change for the profession.

And yet, if you look at other professions, such as law, we're not seeing much adoption at all. Many lawyers I've spoken to have used ChatGPT occasionally but have not made AI a central part of their work.

This disparity is puzzling. LLMs are fundamentally language models - they should be as transformative for legal writing as they are for code. Yet lawyers remain hesitant while programmers have embraced AI wholesale. Why?

I believe part of the answer lies in a crucial difference: software has tests, and legal documents don't.

The Verification Gap

When AI writes code, software developers have multiple safety nets:

- Immediate feedback: Code either compiles and runs, or it doesn't

- Systematic verification: Automated tests check that functions behave correctly

- Transparent execution: Debuggers let you step through exactly what the code does

When AI writes contracts, lawyers have... track changes and hope for the best.

This creates a fundamental problem for AI adoption: how do you know if AI-generated legal work is correct? Without systematic verification methods, lawyers can't confidently rely on AI assistance - the risk of undetected errors is simply too high.

In software, this question has a clear answer. Programmers figured out that LLMs are excellent at both writing code and writing tests for that code. Because tests can be reviewed by humans, they make it much easier to verify the correctness of AI-generated work. The tests act as a safety net that enables confidence in AI assistance.

Legal practice has no equivalent safety net. When an LLM helps draft a contract, correctness verification falls entirely on human review. And in law, correctness isn't just nice-to-have - hallucinations have already caused significant problems for lawyers, from fictitious case citations to missed statutory requirements.

This verification gap may explain in part why lawyers remain hesitant to adopt AI tools. It's not that they're technophobic or inherently conservative. It's that they lack the systematic verification infrastructure that makes AI adoption safe and practical.

What Would Legal Tests Look Like?

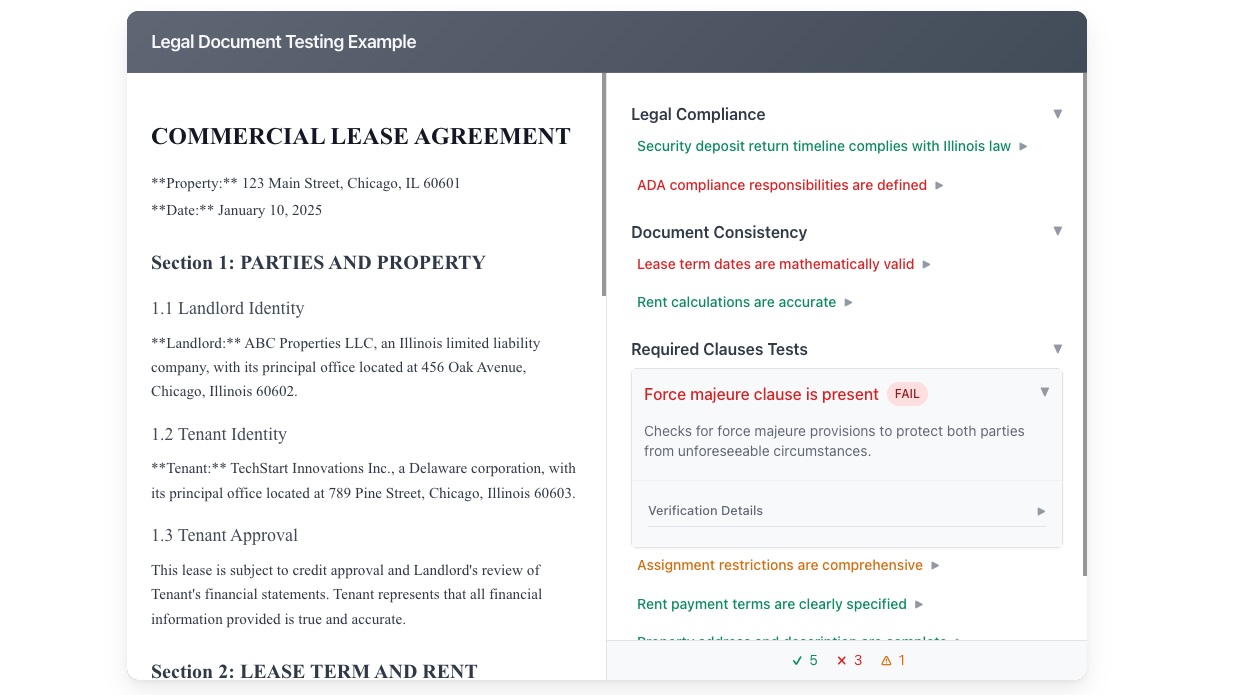

Legal Document Testing Example

COMMERCIAL LEASE AGREEMENT

**Property:** 123 Main Street, Chicago, IL 60601

**Date:** January 10, 2025

Section 1: PARTIES AND PROPERTY

1.1 Landlord Identity

**Landlord:** ABC Properties LLC, an Illinois limited liability company, with its principal office located at 456 Oak Avenue, Chicago, Illinois 60602.

1.2 Tenant Identity

**Tenant:** TechStart Innovations Inc., a Delaware corporation, with its principal office located at 789 Pine Street, Chicago, Illinois 60603.

1.3 Tenant Approval

This lease is subject to credit approval and Landlord's review of Tenant's financial statements. Tenant represents that all financial information provided is true and accurate.

Section 2: LEASE TERM AND RENT

2.1 Lease Term

The term of this lease shall commence on **January 1, 2025** and expire on **December 31, 2024**, unless sooner terminated in accordance with the terms hereof.

2.2 Base Rent

Tenant shall pay base rent as follows:

All rent payments are due on the first day of each month, without demand, deduction, or set-off.

Section 3: SECURITY DEPOSIT AND ADDITIONAL CHARGES

3.1 Security Deposit Amount

Upon execution of this lease, Tenant shall deposit with Landlord the sum of **$7,500.00** as security for the faithful performance of all terms and conditions of this lease.

3.2 Security Deposit Return

The security deposit shall be returned to Tenant within **thirty (30) days** after termination of this lease, less any amounts applied toward unpaid rent, damages, or other charges for which Tenant is responsible under this lease.

Section 4: USE AND RESTRICTIONS

4.1 Permitted Use

The premises shall be used solely for general office purposes and software development activities, and for no other purpose without Landlord's prior written consent.

4.2 Assignment Limitations

Tenant may not assign this lease or any interest herein without prior written consent of Landlord. Any attempted assignment without such consent shall be void and shall constitute a material breach of this lease.

Test suite (right-hand panel), grouped into three categories: Legal Compliance, Document Consistency, and Required Clauses Tests.

Sample Legal Compliance test: Verifies that accessibility compliance responsibilities are clearly allocated between landlord and tenant per Americans with Disabilities Act requirements.

The interface above shows what legal document testing could look like in practice. On the left is a commercial lease agreement. On the right is a systematic test suite that automatically verifies the document against legal requirements, internal consistency checks, and standard clause requirements. Try it out: you can browse the tests, view each test's references to the document, and more.

Notice how each test provides transparent verification. The passing "Security deposit return timeline complies with Illinois law" test shows exactly what statute was checked and which document sections were analyzed. The failing "Lease term dates are mathematically valid" test reveals a critical error - the lease ends before it begins - with a clear execution trace showing how the system detected this impossibility.
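A check like the failing lease-term test need not involve an AI model at all. Here is a minimal sketch, assuming the commencement and expiration dates have already been extracted from Section 2.1 as strings. The names `CheckResult` and `check_lease_term` are hypothetical and are not taken from the interface described above.

```python
from dataclasses import dataclass
from datetime import datetime


@dataclass
class CheckResult:
    name: str
    passed: bool
    trace: list[str]  # human-readable steps a reviewer can audit


def check_lease_term(commence: str, expire: str) -> CheckResult:
    """Pass only if the lease expires after it commences."""
    fmt = "%B %d, %Y"  # assumed date style, e.g. "January 1, 2025"
    start = datetime.strptime(commence, fmt).date()
    end = datetime.strptime(expire, fmt).date()
    trace = [
        f"Parsed commencement date: {start.isoformat()}",
        f"Parsed expiration date: {end.isoformat()}",
        f"Expiration after commencement: {end > start}",
    ]
    return CheckResult("Lease term dates are mathematically valid", end > start, trace)


# Dates as they appear in Section 2.1 of the sample lease.
result = check_lease_term("January 1, 2025", "December 31, 2024")
print("PASS" if result.passed else "FAIL")
for step in result.trace:
    print(" -", step)
```

The trace list plays the role of the execution trace: each step records what was parsed and compared, so a reviewer can see why the test failed rather than just that it did.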

A powerful thing about tests: write tests once, and you can apply them to every contract of that type.
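That reuse could look something like the following sketch. The keyword checks are deliberately crude stand-ins for real verification logic, and every name here (`LEASE_TESTS`, `run_suite`, the sample excerpts) is hypothetical.

```python
from typing import Callable

# A test is a name paired with a function from document text to pass/fail.
LegalTest = tuple[str, Callable[[str], bool]]

# Written once; crude keyword checks stand in for real verification logic.
LEASE_TESTS: list[LegalTest] = [
    ("Security deposit clause is present", lambda text: "security deposit" in text.lower()),
    ("Assignment clause is present", lambda text: "assign" in text.lower()),
]


def run_suite(tests: list[LegalTest], documents: dict[str, str]) -> dict[str, dict[str, bool]]:
    """Run every test against every document and collect the results."""
    return {
        doc_name: {test_name: check(text) for test_name, check in tests}
        for doc_name, text in documents.items()
    }


documents = {  # hypothetical lease excerpts
    "lease_a": "Tenant shall pay a security deposit. Tenant may not assign this lease.",
    "lease_b": "Tenant may not assign this lease without consent.",
}

for doc_name, results in run_suite(LEASE_TESTS, documents).items():
    for test_name, passed in results.items():
        print(f"{doc_name}: {'PASS' if passed else 'FAIL'} - {test_name}")
```

Adding a new lease to `documents` requires no new test code, which is the scaling property the paragraph above describes.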

That hallucinated case citation? Test catches it by verifying against legal databases. Violates state statutory requirements? Test catches it by checking current law. Wrong client entity details? Test catches it by cross-referencing your records.

This is the same principle that makes software testing transformative - you create verification infrastructure that scales with your work, not against it.

How Might a Legal Test Actually Work?

Let's demystify this with a simple example: checking whether a security deposit clause exists. We start with a hypothetical specification of a test:

The Test Specification:

Test: "Security deposit clause is present"

Type: Requirements Check

Requirements:

1. Document specifies a security deposit amount

2. Document explains what the deposit secures

3. Document states when deposit will be returned

When the system runs the test, it can provide the test as a prompt to an AI agent that can use tools, read documents, and do research. For example, the system could send this prompt to an AI model:

"Review this lease document. For each requirement below, find specific text that satisfies it and cite the exact location. If a requirement isn't met, explain what's missing:

- Document specifies a security deposit amount

- Document explains what the deposit secures

- Document states when deposit will be returned"
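To make the step from specification to prompt concrete, here is one way it might be sketched. The `LegalTestSpec` class and `build_prompt` function are illustrative assumptions, not a description of an existing system. The prompt wording is the one quoted above.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LegalTestSpec:
    """Hypothetical in-memory form of a test specification."""
    name: str
    test_type: str
    requirements: tuple[str, ...]


def build_prompt(spec: LegalTestSpec) -> str:
    """Render a test specification as a prompt for an AI model."""
    bullets = "\n".join(f"- {req}" for req in spec.requirements)
    return (
        "Review this lease document. For each requirement below, find specific "
        "text that satisfies it and cite the exact location. If a requirement "
        "isn't met, explain what's missing:\n\n" + bullets
    )


spec = LegalTestSpec(
    name="Security deposit clause is present",
    test_type="Requirements Check",
    requirements=(
        "Document specifies a security deposit amount",
        "Document explains what the deposit secures",
        "Document states when deposit will be returned",
    ),
)
print(build_prompt(spec))
```

Keeping the specification as data, separate from the prompt template, is what would let the same test be reviewed by a human and reused across documents.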

The AI response can then systematically address the requirements of the test, determining whether each requirement is met and providing evidence and reasoning for each determination. Here is an example AI output for the test we've shown:

Requirement 1: ✅ SATISFIED
Location: Section 3.1
Text: "Tenant shall deposit with Landlord the sum of $7,500.00"
Reasoning: This clause clearly specifies a dollar amount ($7,500.00) that constitutes the security deposit.

Requirement 2: ✅ SATISFIED
Location: Section 3.1
Text: "as security for the faithful performance of all terms and conditions"
Reasoning: This explains the purpose of the deposit - to secure tenant's performance of lease obligations.

Requirement 3: ✅ SATISFIED
Location: Section 3.2
Text: "shall be returned to Tenant within thirty (30) days after termination"
Reasoning: This establishes a specific timeline (30 days) for when the deposit must be returned to the tenant.

As you can see, this output is structured and includes key information for assessing the reliability of the AI's work on the test. The AI model has provided a citation for each requirement, along with reasoning that explains how the cited text satisfies it. Our interface above structures this output into an easily reviewable determination (PASS or FAIL) while also providing an auditable trace that a user can drill down into to verify the test. This goes beyond a black-box "this looks good", and when the AI fails, the trace makes it possible to understand why.
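The step from structured output to a PASS or FAIL determination could be sketched as follows. This assumes the model's output puts each field on its own line in the layout shown above, and that an unmet requirement is marked "NOT SATISFIED". The marker, the `Finding` class, and both function names are assumptions made for illustration.

```python
import re
from dataclasses import dataclass


@dataclass
class Finding:
    requirement: int
    satisfied: bool
    location: str
    text: str
    reasoning: str


# Assumed layout: "Requirement N: <optional symbol> <status>" followed by
# "Location:", "Text:" and "Reasoning:" lines, one field per line.
BLOCK = re.compile(
    r"Requirement (\d+):\s*[^\w\s]*\s*(NOT SATISFIED|SATISFIED)\s*\n"
    r"Location:\s*(.*)\n"
    r"Text:\s*(.*)\n"
    r"Reasoning:\s*(.*)"
)


def parse_findings(output: str) -> list[Finding]:
    """Extract one Finding per requirement block in the model output."""
    return [
        Finding(int(num), status == "SATISFIED", loc.strip(),
                text.strip().strip('"'), why.strip())
        for num, status, loc, text, why in BLOCK.findall(output)
    ]


def determine(findings: list[Finding], expected: int) -> str:
    """PASS only if every expected requirement was reported and satisfied."""
    if len(findings) != expected:
        return "FAIL"  # a missing or unparseable block is treated as a failure
    return "PASS" if all(f.satisfied for f in findings) else "FAIL"


sample = """\
Requirement 1: ✅ SATISFIED
Location: Section 3.1
Text: "Tenant shall deposit with Landlord the sum of $7,500.00"
Reasoning: This clause clearly specifies a dollar amount.

Requirement 2: ✅ SATISFIED
Location: Section 3.1
Text: "as security for the faithful performance of all terms and conditions"
Reasoning: This explains the purpose of the deposit.

Requirement 3: ✅ SATISFIED
Location: Section 3.2
Text: "shall be returned to Tenant within thirty (30) days after termination"
Reasoning: This establishes a specific timeline for return.
"""

findings = parse_findings(sample)
print(determine(findings, expected=3))
for f in findings:
    print(f"  Requirement {f.requirement} -> {f.location}")
```

Treating an incomplete or unparseable response as a failure is a conservative design choice: the determination never reports PASS on evidence the reviewer cannot inspect.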

The Vision

Software developers didn't adopt AI because they're risk-takers. They adopted it because they have systematic ways to verify correctness.

Lawyers need tools that are just as powerful:

- Legal document tests that provide that same verification infrastructure for lawyers, including systematic verification of legal compliance, internal consistency, and client needs

- Transparent execution traces that can show the attorney user exactly what was checked and how

- Reusable test specifications that can improve document quality across an entire practice

In short, legal documents are like legal software - they define behavior, specify conditions, and create consequences when executed in the real world through legal systems. And like software, legal documents deserve tests.

Thoughts on legal document testing? I'd love to hear from lawyers and developers alike. Find me on X @niemerg.