Introducing Sudoku as a Fine-Tuning Task

Introduction

With the launch of ChatGPT, AI has become the hottest topic in tech, and people are working to integrate it into virtually everything. Despite this fervor, there is little practical content that explores AI tools in a way that deepens developers' understanding of Large Language Models (LLMs).

So I set out to learn about LLMs myself by focusing on a problem I found interesting and potentially valuable for understanding them: sudoku. Sudoku is a useful way to probe the reasoning processes of LLMs, offering a simple, meaningful, and fun way to assess their reasoning abilities as an alternative to the typical mathematical puzzles. I've documented my findings and insights in a series of articles, choosing 4x4 sudoku puzzles as a testing ground because they are simple and accessible. I invite you to join me in this exploration, where we'll not only challenge LLMs but also sharpen our own understanding of these remarkable tools.

I have several goals for this series. First, I’d like us to develop a sense of how well models can reason on simple tasks. Second, I’d like us to learn how to improve a model's performance, and in particular its reasoning, on a desired task. As part of that, I’d like us to learn more about evaluating a model and examining its outputs, to better understand its strengths and weaknesses and how and why it fails. Finally, I’d like us to look at tools and techniques, such as prompt improvements and prompting frameworks, to see whether they improve the model's performance on the task. After achieving these goals, I believe we will have developed an intuition for how LLMs perform and how they can be improved.

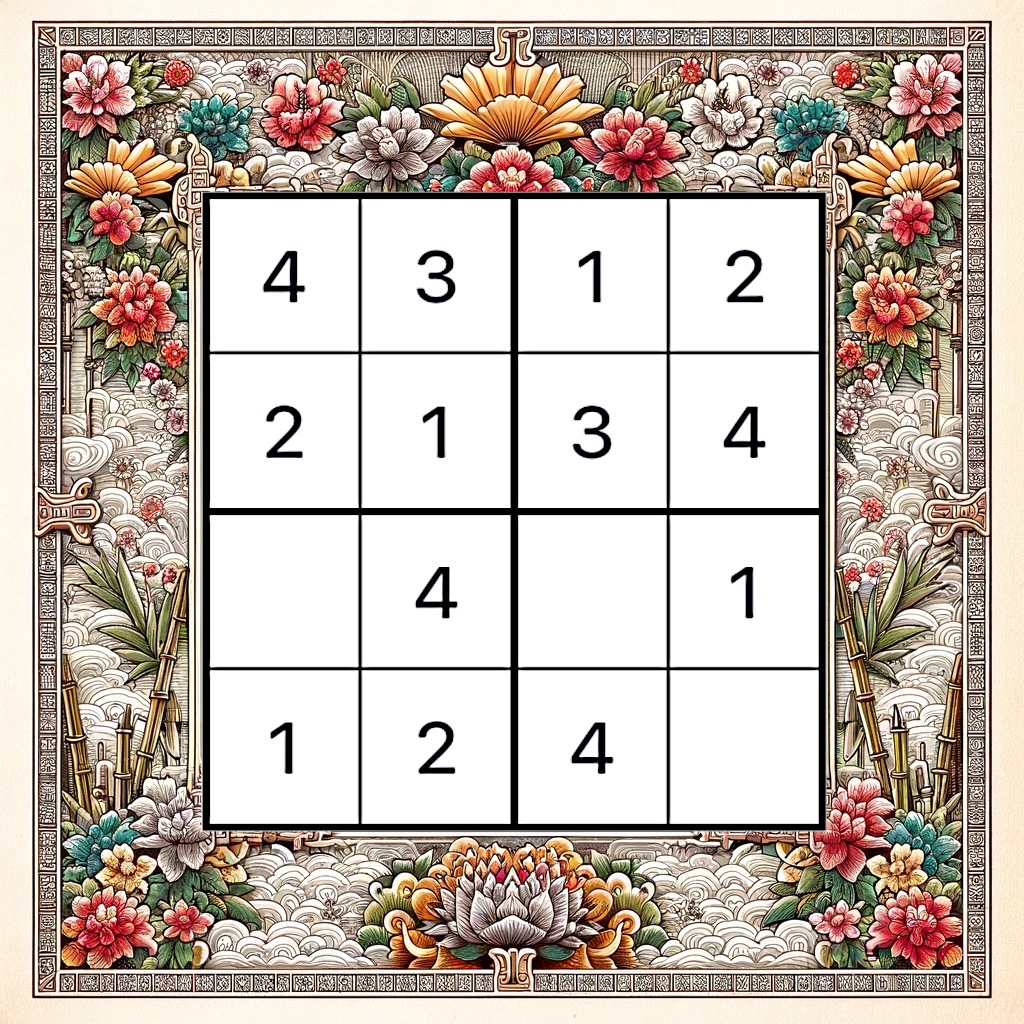

What is 4x4 Sudoku?

Let's talk about sudoku. Sudoku is a well-known number-placement puzzle that typically uses a 9x9 grid. For our project, however, we'll focus on a simpler 4x4 variant. This version uses a grid divided into four 2x2 subgrids, and the challenge is to fill each row, column, and subgrid with the numbers 1 to 4. I've created a playable version so you can give it a shot: just select a cell and enter a number. If the number is incorrect, it will be displayed in red; if it is correct, it will be displayed in black.
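
The rules are easy to state precisely in code. The following is a minimal sketch, not taken from the Sudoku repo, of a checker that confirms a completed 4x4 grid holds the digits 1 to 4 exactly once in every row, column, and 2x2 subgrid. The function name `is_complete_and_valid` is hypothetical.

```python
from typing import List

Grid = List[List[int]]
DIGITS = {1, 2, 3, 4}


def is_complete_and_valid(grid: Grid) -> bool:
    """Return True if every row, column, and 2x2 subgrid holds exactly 1-4."""
    rows = [list(row) for row in grid]
    cols = [[grid[r][c] for r in range(4)] for c in range(4)]
    # Each 2x2 subgrid starts at a row offset and column offset of 0 or 2
    boxes = [
        [grid[r][c] for r in range(br, br + 2) for c in range(bc, bc + 2)]
        for br in (0, 2)
        for bc in (0, 2)
    ]
    # A unit is correct only if its four cells are exactly the digits 1-4
    return all(len(unit) == 4 and set(unit) == DIGITS for unit in rows + cols + boxes)


example = [[1, 2, 3, 4], [3, 4, 1, 2], [2, 1, 4, 3], [4, 3, 2, 1]]
print(is_complete_and_valid(example))  # True
```

Because each unit has exactly four cells, requiring the set of values to equal {1, 2, 3, 4} also rules out duplicates and empty cells.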

The scaled-down 4x4 version offers a good balance between complexity and solvability, making it easy for us to understand how the model is reasoning about a puzzle. Sudoku requires logical reasoning and an understanding of spatial arrangements, which makes it an interesting and fun challenge for a language model. With 4x4 sudoku we can create plenty of puzzles and evaluate solutions straightforwardly, which provides an excellent framework for training and analysis. The small grid also makes it easy to review the solutions the models produce, so we can see what the models are doing and how well they perform.
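
To make "create plenty of puzzles" concrete, here is a minimal sketch of one common generation approach, not necessarily the one the Sudoku repo uses: start from a known valid grid, apply transformations that preserve validity (relabeling digits, reordering rows within a 2-row band and the bands themselves, and the same for columns), then blank out random cells. The names `random_solved_grid` and `make_puzzle` are hypothetical. Blanking random cells does not guarantee a unique solution; a real generator would verify that with a solver.

```python
import random
from typing import List, Tuple

Grid = List[List[int]]

# A known valid 4x4 grid to start from
BASE: Grid = [[1, 2, 3, 4], [3, 4, 1, 2], [2, 1, 4, 3], [4, 3, 2, 1]]


def shuffled_order(rng: random.Random) -> List[int]:
    """Order of 0-3 that shuffles within the pairs (0, 1), (2, 3) and swaps the pairs."""
    pairs = [[0, 1], [2, 3]]
    for pair in pairs:
        rng.shuffle(pair)
    rng.shuffle(pairs)
    return [i for pair in pairs for i in pair]


def random_solved_grid(rng: random.Random) -> Grid:
    """Transform BASE with moves that keep every row, column, and subgrid valid."""
    digits = [1, 2, 3, 4]
    rng.shuffle(digits)
    relabel = dict(zip([1, 2, 3, 4], digits))  # consistently swap digit labels
    rows, cols = shuffled_order(rng), shuffled_order(rng)
    return [[relabel[BASE[r][c]] for c in cols] for r in rows]


def make_puzzle(rng: random.Random, blanks: int = 8) -> Tuple[Grid, Grid]:
    """Return (puzzle, solution), with `blanks` cells set to 0 in the puzzle.
    Uniqueness of the solution is NOT checked."""
    solution = random_solved_grid(rng)
    puzzle = [row[:] for row in solution]
    for index in rng.sample(range(16), blanks):
        puzzle[index // 4][index % 4] = 0
    return puzzle, solution


rng = random.Random(7)  # seeded so runs are reproducible
puzzle, solution = make_puzzle(rng)
print(puzzle)
print(solution)
```

Keeping the solution alongside each puzzle is what makes automatic evaluation cheap: checking a model's answer becomes a lookup.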

The Sudoku Challenge

To follow along with this tutorial, check out Sudoku-Basics.ipynb in the Sudoku Repo.

Our focus is on solving a single cell within a 4x4 Sudoku puzzle, a task that, once mastered, allows for the iterative solution of any puzzle. Consider the following example puzzle:

|   |   |   |   |
|---|---|---|---|
|   |   | 3 | 2 |
| 1 |   |   |   |
| 2 |   | 1 | 4 |

We can represent the puzzle as a list of Python lists:

```python
puzzle = [[0, 0, 0, 0], [0, 0, 3, 2], [1, 0, 0, 0], [2, 0, 1, 4]]
```

This is the representation we will use for the puzzle in our code and also when presenting it to the language models. Each sub-list is a row, and 0 marks an empty cell.
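
To see how the list maps back onto the grid, here is a small sketch of a hypothetical `render` helper (not part of the repo) that draws the puzzle as text, printing `.` for empty cells and marking the 2x2 subgrid borders.

```python
from typing import List

Grid = List[List[int]]


def render(grid: Grid) -> str:
    """Draw a 4x4 grid as text, with '.' for empty cells (0) and 2x2 box borders."""
    lines = []
    for r, row in enumerate(grid):
        if r == 2:
            lines.append("----+----")  # border between the top and bottom subgrids
        cells = ["." if v == 0 else str(v) for v in row]
        lines.append(f"{cells[0]} {cells[1]} | {cells[2]} {cells[3]}")
    return "\n".join(lines)


puzzle = [[0, 0, 0, 0], [0, 0, 3, 2], [1, 0, 0, 0], [2, 0, 1, 4]]
print(render(puzzle))
# . . | . .
# . . | 3 2
# ----+----
# 1 . | . .
# 2 . | 1 4
```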

In this puzzle, many cells are unsolved. We will ask the language model to analyze the puzzle and solve one cell at a time, based on the information available. Solving a full puzzle would then be a process of iteratively solving one cell at a time until the full puzzle is solved.
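
The iterative process can be sketched without a language model at all. Below, a deterministic rule stands in for the LLM: find an empty cell whose row, column, and subgrid leave exactly one possible digit (often called a "naked single") and fill it, repeating until the grid is full. The names `candidates`, `solve_one_cell`, and `solve_iteratively` are hypothetical, and this simple rule can get stuck on harder puzzles; it happens to be enough for our example.

```python
from typing import List, Optional, Set, Tuple

Grid = List[List[int]]


def candidates(grid: Grid, r: int, c: int) -> Set[int]:
    """Digits 1-4 not already used in the cell's row, column, or 2x2 subgrid."""
    used = set(grid[r]) | {grid[i][c] for i in range(4)}
    br, bc = 2 * (r // 2), 2 * (c // 2)
    used |= {grid[i][j] for i in range(br, br + 2) for j in range(bc, bc + 2)}
    return {1, 2, 3, 4} - used


def solve_one_cell(grid: Grid) -> Optional[Tuple[int, int, int]]:
    """Stand-in for the LLM: return (row, col, digit) for the first empty cell
    with exactly one candidate, or None if there is no such cell."""
    for r in range(4):
        for c in range(4):
            if grid[r][c] == 0:
                options = candidates(grid, r, c)
                if len(options) == 1:
                    return r, c, options.pop()
    return None


def solve_iteratively(puzzle: Grid) -> Grid:
    """Fill one cell per step until the grid is full or no step is possible."""
    grid = [row[:] for row in puzzle]  # work on a copy
    while any(0 in row for row in grid):
        step = solve_one_cell(grid)
        if step is None:
            break  # this simple rule is stuck; a smarter solver would be needed
        r, c, digit = step
        grid[r][c] = digit
    return grid


puzzle = [[0, 0, 0, 0], [0, 0, 3, 2], [1, 0, 0, 0], [2, 0, 1, 4]]
print(solve_iteratively(puzzle))
# [[3, 2, 4, 1], [4, 1, 3, 2], [1, 4, 2, 3], [2, 3, 1, 4]]
```

Swapping `solve_one_cell` for a call to a language model gives exactly the loop described above, which is why getting a single cell right is the core task.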

Constructing the Prompt

The key to guiding the model is in the construction of the prompt. In the following prompt, we ask the model to act as a sudoku tutor to analyze the puzzle, solve a cell, and explain its reasoning in natural language. This not only seeks a solution but also sheds light on the model's thought process. Here is an example of how the prompt is structured:

We are working on the following sudoku puzzle (each sub-list represents a row): [puzzle]

You are a sudoku tutor. Create a brief analysis that finds an unsolved cell and solves it...
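
The `[puzzle]` placeholder is filled with the Python list itself. The sketch below assumes the template uses a single `{}` placeholder, which the `brief_analysis.format(puzzle)` call in the next step suggests; only the first sentence of the prompt is reproduced here, and the variable name `template` is hypothetical.

```python
# Hypothetical template: only the first sentence of the article's prompt is
# reproduced; the tutor instructions are elided in the article.
template = (
    "We are working on the following sudoku puzzle "
    "(each sub-list represents a row): {}"
)

puzzle = [[0, 0, 0, 0], [0, 0, 3, 2], [1, 0, 0, 0], [2, 0, 1, 4]]
prompt = template.format(puzzle)  # the list is inserted using its str() form
print(prompt)
# We are working on the following sudoku puzzle (each sub-list represents a row): [[0, 0, 0, 0], [0, 0, 3, 2], [1, 0, 0, 0], [2, 0, 1, 4]]
```

One caveat of `str.format`: any literal braces elsewhere in the template must be doubled (`{{` and `}}`), otherwise formatting raises an exception.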

Now we can use that prompt to see whether GPT-3.5 can analyze the puzzle and solve a cell. We format the prompt with our puzzle and then make a call to OpenAI:

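```python
# Imports assume the langchain-openai and langchain-core packages
from langchain_openai import ChatOpenAI
from langchain_core.messages import HumanMessage

model_name = "gpt-3.5-turbo-1106"
model = ChatOpenAI(model=model_name)

# brief_analysis is the prompt template shown above; format() inserts the puzzle
prompt = brief_analysis.format(puzzle)
message = model.invoke([HumanMessage(content=prompt)])
print(message.content)
```

GPT-3.5 will output something like: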

The row with the most solved cells is row 3 with numbers: 3 and 1. Because each row must contain the digits 1-4, the unsolved cell must be 2. Therefore row 3, column 2 is the number 2.
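
Because this analysis ends in a fixed-looking sentence, it is tempting to pull out the answer with a regular expression rather than a second model. The sketch below shows that alternative; the `parse_cell_claim` helper and its pattern are hypothetical, not from the repo. It also exposes an ambiguity: the sentence does not say whether rows and columns are counted from 0 or 1, and no row of our puzzle actually contains both 3 and 1.

```python
import re
from typing import Optional, Tuple

# Hypothetical pattern for sentences like "row 3, column 2 is the number 2".
# It only matches this exact phrasing; free-form analyses will often differ.
CELL_PATTERN = re.compile(
    r"row\s+(\d)\s*,\s*column\s+(\d)\s+is\s+the\s+number\s+(\d)", re.IGNORECASE
)


def parse_cell_claim(text: str) -> Optional[Tuple[int, int, int]]:
    """Return (row, column, digit) exactly as written, or None if not found.
    Whether row/column are 0- or 1-indexed is left to the caller."""
    match = CELL_PATTERN.search(text)
    if match is None:
        return None
    row, col, digit = (int(g) for g in match.groups())
    return row, col, digit


analysis = (
    "The row with the most solved cells is row 3 with numbers: 3 and 1. "
    "Because each row must contain the digits 1-4, the unsolved cell must be 2. "
    "Therefore row 3, column 2 is the number 2."
)
print(parse_cell_claim(analysis))  # (3, 2, 2)
```

A pattern this rigid breaks as soon as the model rephrases its answer, which is one reason to hand the extraction step to a stronger model.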

Validating the Solution

To extract and validate this solution, we use a helper function that calls GPT-4 to turn the analysis into a proposed puzzle, then display the proposed puzzle next to the original and check whether it is correct:

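```python
# analysis_to_puzzle_solution, display_sudoku_comparison and
# is_proposed_solution_valid are helpers from the Sudoku repo
extraction_model = ChatOpenAI(model="gpt-4-0613")

# GPT-4 converts the free-text analysis into a proposed puzzle grid
proposed = analysis_to_puzzle_solution(extraction_model, puzzle, message.content)
display_sudoku_comparison(proposed, puzzle)

# The fully solved puzzle, needed to check the proposed cell
solution = [[3, 2, 4, 1], [4, 1, 3, 2], [1, 4, 2, 3], [2, 3, 1, 4]]

if is_proposed_solution_valid(puzzle, solution, proposed):
    print("Correct!")
else:
    print("Incorrect!")
```

In this case, the model is incorrect, so we will see something like: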

|   |   |   |   |
|---|---|---|---|
|   | 2 | 3 | 2 |
| 1 |   |   |   |
| 2 |   | 1 | 4 |

Incorrect!

Better luck next time, GPT-3.5!
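
What does a check like `is_proposed_solution_valid` need to do? The repo's version may work differently, but here is a minimal sketch under the assumption that a valid answer fills exactly one previously empty cell with the digit from the known solution. The name `check_single_cell` is hypothetical.

```python
from typing import List

Grid = List[List[int]]


def check_single_cell(puzzle: Grid, solution: Grid, proposed: Grid) -> bool:
    """Hypothetical checker: True only if `proposed` fills exactly one
    previously empty cell of `puzzle` with the digit from `solution`."""
    changed = [
        (r, c)
        for r in range(4)
        for c in range(4)
        if proposed[r][c] != puzzle[r][c]
    ]
    if len(changed) != 1:
        return False  # nothing was filled, or more than one cell was altered
    r, c = changed[0]
    return puzzle[r][c] == 0 and proposed[r][c] == solution[r][c]


puzzle = [[0, 0, 0, 0], [0, 0, 3, 2], [1, 0, 0, 0], [2, 0, 1, 4]]
solution = [[3, 2, 4, 1], [4, 1, 3, 2], [1, 4, 2, 3], [2, 3, 1, 4]]

wrong = [row[:] for row in puzzle]
wrong[1][1] = 2  # a second 2 in a row that already contains 2
right = [row[:] for row in puzzle]
right[1][1] = 1

print(check_single_cell(puzzle, solution, wrong))  # False
print(check_single_cell(puzzle, solution, right))  # True
```

Comparing against the known solution, rather than only checking the visible row, column, and subgrid constraints, also rejects digits that fit the current grid but lead to a dead end later.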

OK, to summarize: we are using sudoku as a lens to evaluate LLMs. We have looked at why we chose 4x4 sudoku, constructed a prompt that asks a model to solve a cell in a sudoku puzzle, and evaluated the model’s answer. Now that we've gotten the model to attempt a cell (albeit unsuccessfully), in the next article we will evaluate several models on this task.